A Teacher/coach Can Create an Optimal Coaching Environment by:

Reinforcement Learning Coach environments with Cartpole and Atari Optimized by OpenVINO Toolkit

Introduction

In this article, we will introduce Reinforcement Learning Coach and see how it is used as a framework for implementing reinforcement learning scenarios. We will then look at a mechanism for connecting Reinforcement Learning Coach with the OpenVINO toolkit. The main purpose of using the OpenVINO toolkit is to optimize the models created during the reinforcement learning training process. After optimization, we use the Inference Engine to run the application on different Intel target systems so that we can visualize the entire trained simulation. Along the way, we will see how different parameters are used in Reinforcement Learning Coach.

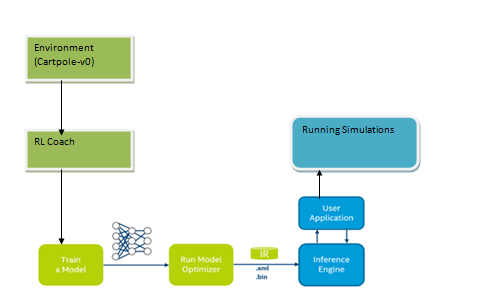

The figure shows the entire process.

Intel Distribution of OpenVINO Toolkit utilization

1) Generate the *.xml and *.bin files (the Intermediate Representation, IR) with the Model Optimizer of the OpenVINO toolkit.

2) Intel OpenVINO first helps us create the required *.xml and *.bin files from the checkpoint files saved during training in Reinforcement Learning Coach. Next, using the Inference Engine from the OpenVINO toolkit, we focus on the final, most acceptable state the simulation reached for the Cartpole environment and showcase that part of the simulation for stability. We capture GIFs of multiple checkpoints while Reinforcement Learning Coach trains; judging from the time spent training, the last checkpoint is the accepted one, so we show it as the stable part of the simulation.

System Requirements

1) Ubuntu 16.04

2) 16 GB RAM

3) 4th Generation Intel processors and beyond

Running experiments on RL Coach

Everything we do in Reinforcement Learning Coach is done through an experiment.

One of the most important parts of running an experiment is the preset mechanism.

A preset uses predefined experiment parameters.

Presets make the interaction between agents and the environment much easier, and give us a simple way to apply different parameters.

Reinforcement Learning Coach is very easy to use from the terminal window. The steps, as we will see, are easy to understand and follow.

For example:

coach -p <preset_name>

After that, we need to pass in the environment in which we will use the Coach framework. The environments are simply simulations that are trained using different Coach parameters.

For environments, we will look at the Atari and Cartpole environments.

Installation of Reinforcement Learning Coach

For this walkthrough we need the same versions I used: Python 3.5 and TensorFlow 1.11. The documentation also mentions that we need Python 3.5 installed on Ubuntu 16.04, and after a lot of trials and experiments I found that TensorFlow 1.11 is the supported version.

First of all, we need Python 3.5 installed, and only that version.

One more essential part

We will have to install exactly TensorFlow 1.11.0, nothing more or less than that.

To start, install Anaconda for Ubuntu 16.04.

Installation of Anaconda IDE

We can use curl to download the required version of the Anaconda IDE.

curl -O https://repo.continuum.io/archive/Anaconda3-5.0.1-Linux-x86_64.sh

After that, we need to run the script:

bash Anaconda3-5.0.1-Linux-x86_64.sh

We press ENTER to continue.

We approve the license terms by answering yes.

A prompt then asks for the install location; we press ENTER to accept the default location.

Next, the installer asks whether to add conda to the PATH; we answer yes.

Creation of the Anaconda Python 3.5 environment

In the next step, we will install Python 3.5 in an Anaconda environment.

conda create -n py35 python=3.5 anaconda

After a successful installation, we need to activate the environment.

source activate py35

Installation of prerequisites for Reinforcement Learning Coach

The major part of the installation and the prerequisites are described step by step on the Reinforcement Learning Coach page. You can have a look; I am sharing the link below.

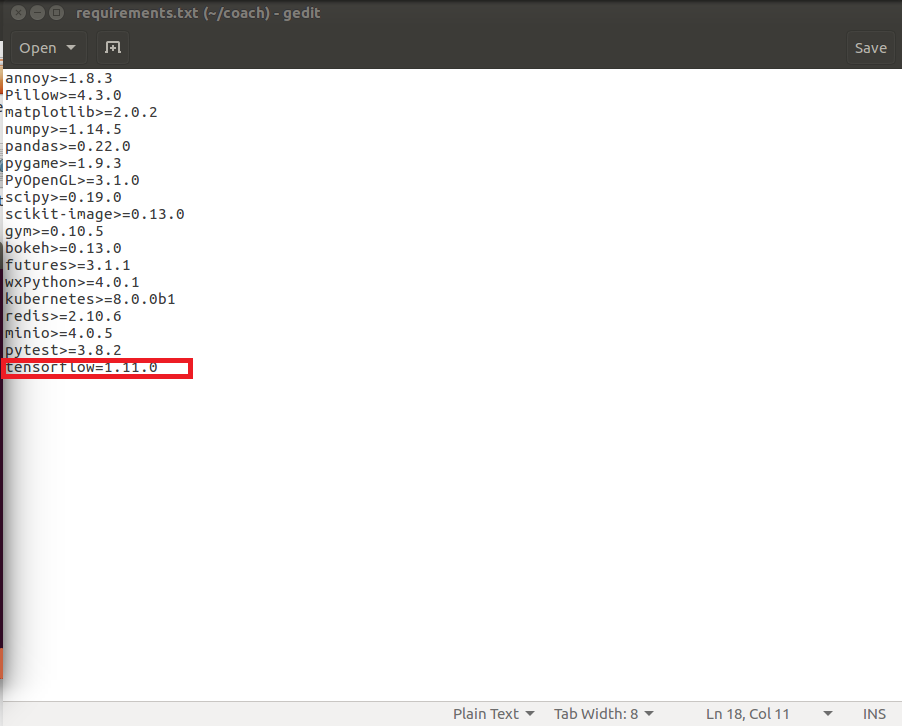

Tweaking the requirements.txt file

One tweak we need to make in the requirements.txt file: the TensorFlow dependency needs to be set as

tensorflow==1.11.0

Then we run

pip3 install -r requirements.txt

This updated file takes care of the dependency and installs the required TensorFlow version, 1.11.0.
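If you prefer to script the tweak rather than edit the file by hand, a minimal sketch follows. It assumes requirements.txt uses one requirement per line; `pin_tensorflow` and `pin_requirements_file` are hypothetical helper names, and a `tensorflow` line is appended if the file has none.

```python
import re

# A requirements line naming the tensorflow package, with or without a version
# specifier. "tensorflow-gpu" lines are deliberately left untouched.
TF_LINE = re.compile(r"^\s*tensorflow\s*([<>=!~].*)?$", re.IGNORECASE)


def pin_tensorflow(requirements_text, version="1.11.0"):
    """Return the requirements text with tensorflow pinned to `version`."""
    pinned = "tensorflow=={}".format(version)
    lines = requirements_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        if TF_LINE.match(line):
            lines[i] = pinned
            found = True
    if not found:
        # No tensorflow entry yet: add one so pip installs the pinned version.
        lines.append(pinned)
    return "\n".join(lines) + "\n"


def pin_requirements_file(path="requirements.txt", version="1.11.0"):
    """Rewrite the file in place (run from inside the cloned coach folder)."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(pin_tensorflow(text, version))
```

Calling `pin_requirements_file()` before `pip3 install -r requirements.txt` gives the same result as the manual edit.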

Note:

To install Coach 0.11.0 we should have

Python 3.5

TensorFlow 1.11.0
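A quick sanity check inside the activated py35 environment can confirm both versions before installing Coach. This is a sketch: `version_problems` is a hypothetical helper, and the expected versions are the ones named in the note above.

```python
import sys


def version_problems(python_version, tensorflow_version):
    """Compare installed versions with the ones this walkthrough used.

    python_version: a tuple such as sys.version_info
    tensorflow_version: a string such as tf.__version__
    Returns a list of problems; an empty list means everything matches.
    """
    problems = []
    if tuple(python_version[:2]) != (3, 5):
        problems.append("expected Python 3.5, found {}.{}".format(*python_version[:2]))
    if tensorflow_version != "1.11.0":
        problems.append("expected TensorFlow 1.11.0, found {}".format(tensorflow_version))
    return problems


if __name__ == "__main__":
    try:
        import tensorflow as tf
        tf_version = tf.__version__
    except ImportError:
        tf_version = "not installed"
    for line in version_problems(sys.version_info, tf_version) or ["versions match"]:
        print(line)
```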

Now we will use the following command inside the cloned folder, that is "coach":

pip3 install -e .

This will install Coach.

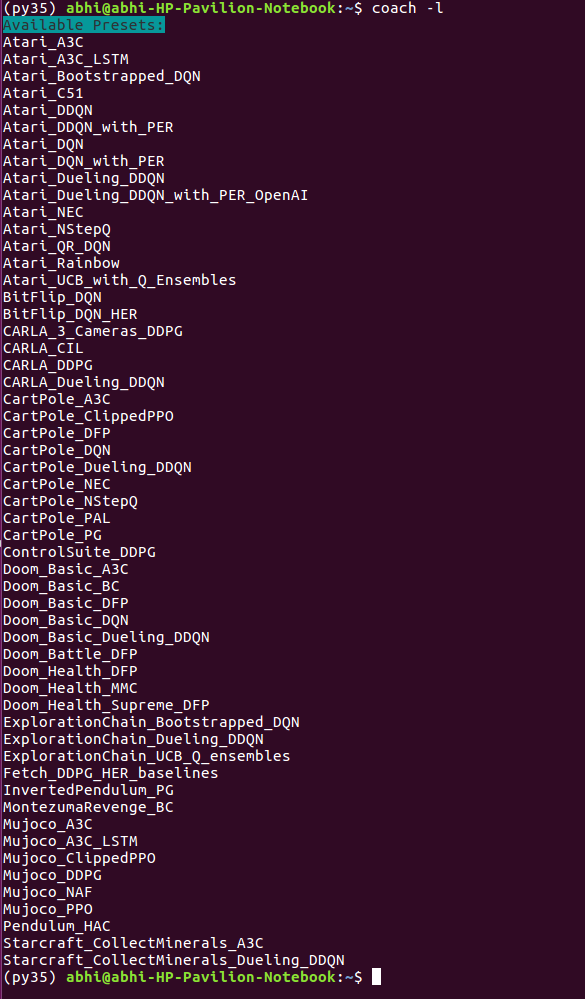

After installing Coach, we will check the available presets. The command to check them is

coach -l

Let us look at some example environments for the simulations we will be working on.

CartPole-v0

Cartpole, also known as an inverted pendulum, is a pendulum with a center of gravity above its pivot point. It is unstable, but can be controlled by moving the pivot point under the center of mass. The goal is to keep the cartpole balanced by applying appropriate forces to the pivot point.

The Cartpole environment scenario for RL Coach

A pole is attached by an un-actuated joint to a cart, which moves along a frictionless track. The system is controlled by applying a force of +1 or -1 to the cart. The pendulum starts upright, and the goal is to prevent it from falling over. A reward of +1 is provided for every time step that the pole remains upright. The episode ends when the pole is more than 15 degrees from vertical, or the cart moves more than 2.4 units from the center.
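The rules above can be made concrete with a small, self-contained simulation. This is a sketch under stated assumptions: the physical constants (masses, pole length, a push of magnitude 10, a 0.02 s time step) are borrowed from the classic cart-pole formulation used by OpenAI Gym, not from this article, and the +1/-1 action only chooses the direction of the push. The 15 degree and 2.4 unit limits and the +1 reward per upright step come from the description above.

```python
import math

# Assumed constants, taken from the classic cart-pole formulation (OpenAI Gym).
GRAVITY = 9.8
CART_MASS = 1.0
POLE_MASS = 0.1
TOTAL_MASS = CART_MASS + POLE_MASS
HALF_POLE_LENGTH = 0.5
FORCE_MAG = 10.0  # magnitude of each push; the action (+1 / -1) picks its sign
TAU = 0.02        # seconds between state updates

# Termination thresholds from the environment description.
ANGLE_LIMIT = math.radians(15)
POSITION_LIMIT = 2.4


def step(state, direction):
    """Advance (x, x_dot, theta, theta_dot) one time step with Euler integration."""
    x, x_dot, theta, theta_dot = state
    force = FORCE_MAG * direction
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    temp = (force + POLE_MASS * HALF_POLE_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE_LENGTH * (4.0 / 3.0 - POLE_MASS * cos_t ** 2 / TOTAL_MASS))
    x_acc = temp - POLE_MASS * HALF_POLE_LENGTH * theta_acc * cos_t / TOTAL_MASS
    return (x + TAU * x_dot, x_dot + TAU * x_acc,
            theta + TAU * theta_dot, theta_dot + TAU * theta_acc)


def is_done(state):
    """The episode ends when the pole tips too far or the cart leaves the track."""
    x, _, theta, _ = state
    return abs(theta) > ANGLE_LIMIT or abs(x) > POSITION_LIMIT


def run_episode(policy, state=(0.0, 0.0, 0.0, 0.0), max_steps=200):
    """Return the total reward: +1 for every step on which the pole stays up."""
    total_reward = 0
    for _ in range(max_steps):
        state = step(state, policy(state))
        if is_done(state):
            break
        total_reward += 1
    return total_reward
```

For example, `run_episode(lambda s: 1)` (always push right) ends quickly with a small reward; a trained agent's job is to find a policy that keeps the reward close to `max_steps`.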

More details can be found below.

Breakout Game environment

Using deep reinforcement learning, we implement a system that could learn to play many classic Atari games with human (and sometimes superhuman) performance.

More details can be found in the link below.

Using coach -l shows us all the presets available in RL Coach.

OpenVINO Toolkit Optimizer process

Now we will look at the OpenVINO toolkit. The major thing to cover is how the TensorFlow checkpoint files are accessed by the OpenVINO toolkit. The Model Optimizer does this job for us with the TensorFlow framework. Let's look at it now.

First of all, we need to install the prerequisites for TensorFlow.

Inside the folder

<INSTALL_DIR>/deployment_tools/model_optimizer/install_prerequisites

we need to run the shell script

install_prerequisites_tf.sh

As we will be saving the model as a *.meta file, we have to follow the procedure below.

In this case, a model consists of three or four files stored in the same directory:

model_name.meta

model_name.index

model_name.data-00000-of-00001 (the digit part may vary)

checkpoint (optional)
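Before running the Model Optimizer, it can help to confirm that all the files listed above are present for a checkpoint. This sketch works on a plain list of file names (for example `os.listdir()` of the checkpoint folder); `missing_checkpoint_files` is a hypothetical helper, and the data-shard numbering is matched with a wildcard because it may vary.

```python
import fnmatch


def missing_checkpoint_files(filenames, model_name):
    """Return the checkpoint files for `model_name` that are absent from `filenames`.

    The optional "checkpoint" file is not required.
    """
    names = set(filenames)
    missing = [model_name + ext for ext in (".meta", ".index")
               if model_name + ext not in names]
    # model_name.data-00000-of-00001: the shard numbers may differ.
    if not fnmatch.filter(names, model_name + ".data-*-of-*"):
        missing.append(model_name + ".data-*-of-*")
    return missing
```

An empty result means the .meta file can be handed to the Model Optimizer.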

To convert such a TensorFlow model:

Go to the <INSTALL_DIR>/deployment_tools/model_optimizer directory.

Run the mo_tf.py script with a path to the MetaGraph .meta file to convert the model:

python3 mo_tf.py --input_meta_graph <INPUT_META_GRAPH>.meta

We have only shown the process here; it will be applied once we create the checkpoint.
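The conversion step can also be driven from Python. A minimal sketch: `--input_meta_graph` is the option used in the Model Optimizer log later in this article, while `--output_dir` and `--data_type` are standard Model Optimizer options added here as assumptions (their defaults mirror that log: FP32 output written to the current directory). The helper names are hypothetical.

```python
import os
import subprocess


def model_optimizer_command(meta_path, output_dir=".", data_type="FP32"):
    """Build the mo_tf.py argument list for a TensorFlow MetaGraph checkpoint."""
    if not meta_path.endswith(".meta"):
        raise ValueError("expected a .meta file, got {}".format(meta_path))
    return ["python3", "mo_tf.py",
            "--input_meta_graph", os.path.expanduser(meta_path),
            "--output_dir", output_dir,
            "--data_type", data_type]


def run_model_optimizer(meta_path, mo_dir, **kwargs):
    """Run mo_tf.py from <INSTALL_DIR>/deployment_tools/model_optimizer (mo_dir)."""
    subprocess.check_call(model_optimizer_command(meta_path, **kwargs), cwd=mo_dir)
```

Building the argument list separately from running it makes the command easy to log or test before a long conversion.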

Let's go back to the Coach environment again.

To run a preset we will have to use

coach -r -p <preset_name>

The parameter -r is used for rendering the scene while training.
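When launching many experiments, it can be convenient to assemble the Coach command line in one place. This sketch covers the options used in this article (-p preset, -lvl level, -r render, -s checkpoint interval in seconds); `-e` for naming the experiment is an extra assumption (without it, Coach prompts for a name, as the training log below shows). `coach_command` is a hypothetical helper.

```python
def coach_command(preset, level=None, render=False, save_secs=None,
                  experiment_name=None):
    """Assemble a `coach` argument list from the options used in this article."""
    cmd = ["coach"]
    if render:
        cmd.append("-r")                     # render the scene while training
    cmd += ["-p", preset]                    # preset with predefined parameters
    if level is not None:
        cmd += ["-lvl", level]               # e.g. breakout for Atari presets
    if save_secs is not None:
        cmd += ["-s", str(save_secs)]        # checkpoint_save_secs
    if experiment_name is not None:
        cmd += ["-e", experiment_name]       # skip the interactive name prompt
    return cmd
```

The result can be passed to `subprocess.call()`; use `coach -l` to look up the exact preset names.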

The most important option we need in order to integrate Reinforcement Learning Coach with OpenVINO is the one that saves the training process after a defined time interval, producing checkpoints of the ongoing training:

coach -s 60

checkpoint_save_secs

The -s option sets checkpoint_save_secs, which makes Coach save checkpoints of the model; here we specify an interval of 60 seconds. This matters because Coach uses the TensorFlow backend, and these checkpoint files are referenced directly by the OpenVINO toolkit, which creates an optimized model from them.
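To illustrate what a time-based setting like checkpoint_save_secs means, here is a small sketch of the idea. It is not Coach's internal implementation; `CheckpointTimer` is a hypothetical class, and the clock is injectable so the behavior can be checked without waiting.

```python
import time


class CheckpointTimer:
    """Report when at least `save_secs` seconds have passed since the last save."""

    def __init__(self, save_secs, clock=time.monotonic):
        self.save_secs = save_secs
        self.clock = clock
        self.last_save = clock()

    def should_save(self):
        now = self.clock()
        if now - self.last_save >= self.save_secs:
            self.last_save = now  # restart the interval from this save
            return True
        return False
```

In a training loop, one would call `should_save()` after each training step and write a checkpoint whenever it returns True; with `save_secs=60` this yields roughly one checkpoint per minute regardless of how many steps fit in that minute.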

Folders

There is a specific way in which the training process is saved on our local PC when we run Coach. Everything related to Coach training and the periodic checkpoint saves is stored in the experiments folder.
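With several runs saved, picking the newest checkpoint by hand gets tedious. The sketch below assumes the layout seen in the Model Optimizer log in this article, `~/experiments/<experiment>/<run folder>/checkpoint/<n>_Step-<step>.ckpt.meta`, and infers the run-folder date format (day_month_year-hour_minute, as in 17_01_2019-03_29) from that single example. The helper names are hypothetical.

```python
import glob
import os
import re
from datetime import datetime

STEP_RE = re.compile(r"_Step-(\d+)\.ckpt\.meta$")  # e.g. 0_Step-605.ckpt.meta
RUN_FORMAT = "%d_%m_%Y-%H_%M"                       # e.g. 17_01_2019-03_29


def latest_checkpoint(meta_paths):
    """Pick the newest run folder, then the highest step inside it."""
    def key(path):
        run_dir = os.path.basename(os.path.dirname(os.path.dirname(path)))
        step = int(STEP_RE.search(path).group(1))
        # Parse the date: plain string sorting would put 17_01 after 09_02.
        return datetime.strptime(run_dir, RUN_FORMAT), step

    candidates = [p for p in meta_paths if STEP_RE.search(p)]
    return max(candidates, key=key) if candidates else None


def find_meta_files(experiment, root="~/experiments"):
    pattern = os.path.join(os.path.expanduser(root), experiment,
                           "*", "checkpoint", "*.ckpt.meta")
    return glob.glob(pattern)
```

For example, `latest_checkpoint(find_meta_files("Atari_NEC"))` returns the .meta path to hand to the Model Optimizer, or None if nothing has been saved yet.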

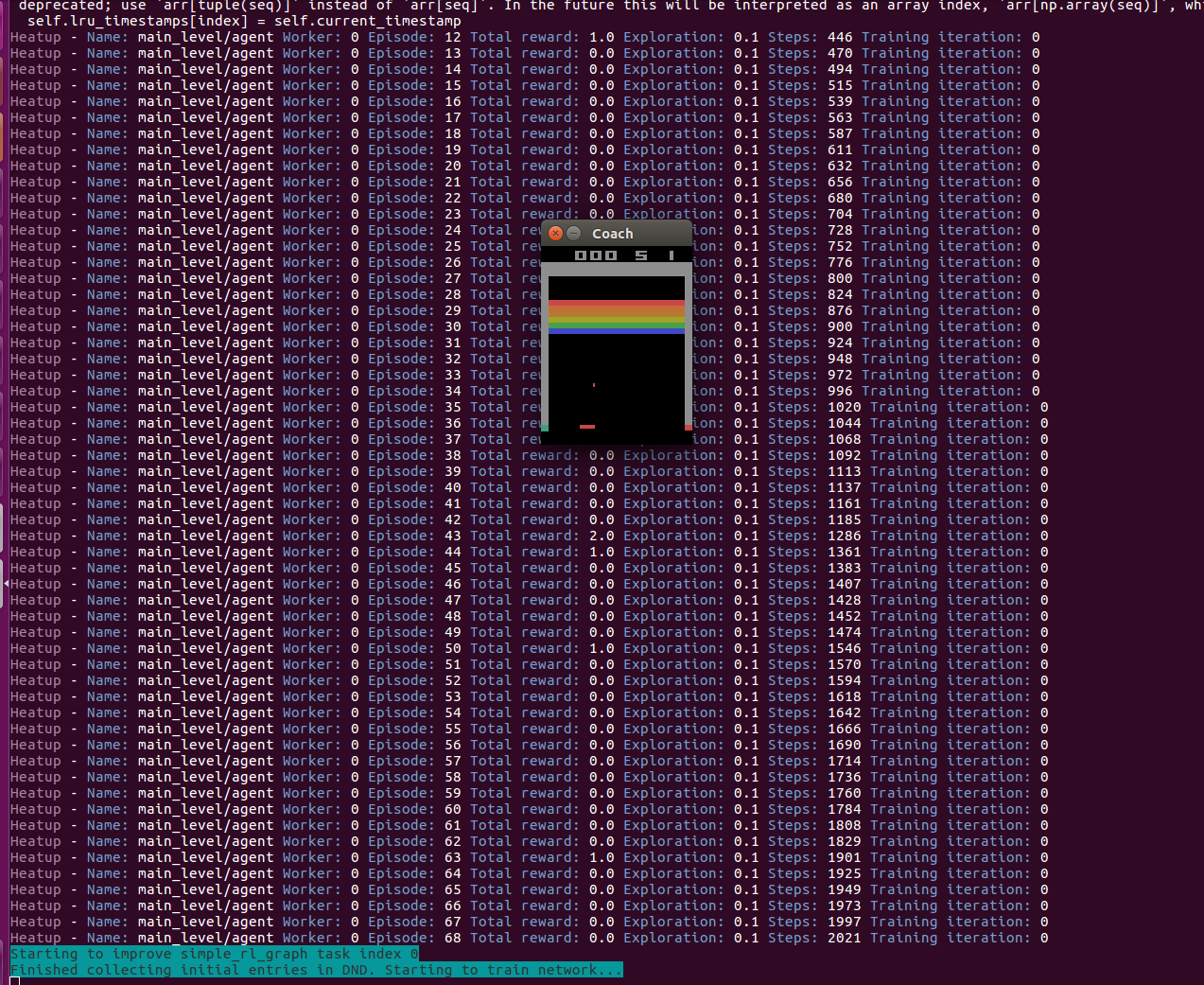

Reinforcement Learning Coach training process with an environment

In this section, we will start a training process with Reinforcement Learning Coach and save checkpoints for use by the Model Optimizer. The training environment used here is Breakout.

Let us start the training process for Reinforcement Learning Coach on an Atari game, with the level set to breakout.

(py35) abhi@abhi-HP-Pavilion-Notebook:~$ coach -r -p Atari_NEC -lvl breakout -s 60
Please enter an experiment name: Atari_NEC
Creating graph - name: BasicRLGraphManager
Creating agent - name: agent
WARNING:tensorflow:From /home/abhi/anaconda3/envs/py35/lib/python3.5/site-packages/rl_coach/architectures/tensorflow_components/heads/dnd_q_head.py:76: calling reduce_sum (from tensorflow.python.ops.math_ops) with keep_dims is deprecated and will be removed in a future version.
Instructions for updating:
keep_dims is deprecated, use keepdims instead
simple_rl_graph: Starting heatup
Heatup - Name: main_level/agent Worker: 0 Episode: 1 Total reward: 1.0 Exploration: 0.1 Steps: 52 Training iteration: 0
Heatup - Name: main_level/agent Worker: 0 Episode: 2 Total reward: 0.0 Exploration: 0.1 Steps: 76 Training iteration: 0
Heatup - Name: main_level/agent Worker: 0 Episode: 3 Total reward: 0.0 Exploration: 0.1 Steps: 98 Training iteration: 0

We save the model periodically, every 60 seconds (-s 60).
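The per-episode lines in the training output above can be tracked with a small parser, for example to watch rewards while training runs. This sketch assumes every episode line carries the fields in the order shown in that log (Episode, Total reward, Exploration, Steps); `parse_episodes` is a hypothetical helper.

```python
import re

# Matches the per-episode fields printed by Coach, e.g.
# "... Episode: 1 Total reward: 1.0 Exploration: 0.1 Steps: 52 ..."
EPISODE_RE = re.compile(
    r"Episode: (?P<episode>\d+) Total reward: (?P<reward>-?[\d.]+) "
    r"Exploration: (?P<exploration>[\d.]+) Steps: (?P<steps>\d+)")


def parse_episodes(log_text):
    """Return (episode, total_reward, steps) tuples found in the log text."""
    return [(int(m.group("episode")), float(m.group("reward")), int(m.group("steps")))
            for m in EPISODE_RE.finditer(log_text)]
```

Note that the Steps field in this log grows from episode to episode (52, 76, 98), so it reads as a running total of environment steps rather than a per-episode length.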

We will now see how we used the OpenVINO toolkit. The saved model is accessed through the OpenVINO toolkit.

(py35) abhi@abhi-HP-Pavilion-Notebook:/opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/model_optimizer$ python mo_tf.py --input_meta_graph ~/experiments/Atari_NEC/17_01_2019-03_29/checkpoint/0_Step-605.ckpt.meta
Model Optimizer arguments:
Common parameters:
- Path to the Input Model: None
- Path for generated IR: /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/model_optimizer/.
- IR output name: 0_Step-605.ckpt
- Log level: SUCCESS
- Batch: Not specified, inherited from the model
- Input layers: Not specified, inherited from the model
- Output layers: Not specified, inherited from the model
- Input shapes: Not specified, inherited from the model
- Mean values: Not specified
- Scale values: Not specified
- Scale factor: Not specified
- Precision of IR: FP32
- Enable fusing: True
- Enable grouped convolutions fusing: True
- Move mean values to preprocess section: False
- Reverse input channels: False
TensorFlow specific parameters:
- Input model in text protobuf format: False
- Offload unsupported operations: False
- Path to model dump for TensorBoard: None
- List of shared libraries with TensorFlow custom layers implementation: None
- Update the configuration file with input/output node names: None
- Use configuration file used to generate the model with Object Detection API: None
- Operations to offload: None
- Patterns to offload: None
- Use the config file: None
Model Optimizer version: 1.4.292.6ef7232d

The Model Optimizer generates an .xml and a .bin file that can be used for inference later.

Inference using our model

Having generated the .xml and .bin files for final inference, we pass the path to the generated .xml file with the -m parameter and the reinforcement learning algorithm with the -i option. This lets us run the simulation with the best-performing checkpoint from Reinforcement Learning Coach, built for the CPU target (-d CPU).

./rl_coach -m <xml/bin path> -i <algorithm> -d CPU

./rl_coach -m 0060.xml -i NEC -d CPU
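At inference time, a value-based agent such as NEC acts greedily on the values the network produces. The sketch below assumes the optimized network returns one Q-value per action and, for Cartpole, that action 0 means "push left" and action 1 "push right" (the OpenAI Gym convention); both are assumptions rather than details of the rl_coach application used above, and the helper names are hypothetical.

```python
# Assumed Cartpole action order (OpenAI Gym convention): 0 = left, 1 = right.
DIRECTIONS = {0: -1, 1: +1}


def greedy_action(q_values):
    """Index of the largest Q-value; ties go to the lowest index."""
    if not q_values:
        raise ValueError("q_values must not be empty")
    best = 0
    for i, q in enumerate(q_values):
        if q > q_values[best]:
            best = i
    return best


def cartpole_direction(q_values):
    """Map the greedy action to a push direction (+1 right, -1 left)."""
    return DIRECTIONS[greedy_action(q_values)]
```

No exploration is applied here: during training Coach explores (the log shows Exploration: 0.1), but when showcasing a trained checkpoint the greedy choice is what we want to visualize.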

As we run the inference, we can pull up the best possible result for balancing in Cartpole or for the Breakout game. We save a GIF for each result we get, so in this case the best result among all the GIF files is shown.
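Choosing "the best result" can be made explicit by scoring each checkpoint on the episodes recorded with it. This is a sketch under an assumed design choice: each checkpoint is ranked by its mean total reward over its evaluation episodes, and checkpoints without episodes are skipped. The function and the checkpoint names in the example are hypothetical.

```python
def best_checkpoint(rewards_by_checkpoint):
    """Return (checkpoint, mean_reward) with the highest mean episode reward.

    rewards_by_checkpoint maps a checkpoint name to the total rewards of the
    evaluation episodes (for example, the ones saved as GIFs) run with it.
    """
    scored = {name: sum(r) / len(r)
              for name, r in rewards_by_checkpoint.items() if r}
    if not scored:
        raise ValueError("no evaluation episodes recorded")
    name = max(scored, key=scored.get)
    return name, scored[name]
```

Averaging over several episodes is less sensitive to one lucky run than taking a single maximum; for Cartpole, a higher total reward means the pole stayed upright longer.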

The Cartpole balancing act before and after the training process, with inference implemented.

The GIFs below show the progress before and after training.

After Training

Conclusion

In the first part of the article, we have seen how Reinforcement Learning Coach works.

We have touched on the installation process and prerequisites.

Along with principles related to reinforcement learning, we have touched on different experiment scenarios.

The experiment scenario we have used for the simulation is Cartpole.

Using Reinforcement Learning Coach, we found an acceptable model and checkpoint for the simulation process.

These optimal checkpoints were in turn converted to the Intermediate Representation (IR) using the Model Optimizer of the Intel Distribution of OpenVINO toolkit.

Using the Inference Engine, we generated the visualization for the best optimized simulation.

We have used the OpenVINO toolkit with Reinforcement Learning Coach to show the simulation for the experiment.

This article gives us scope to work on different experiments with Reinforcement Learning Coach and to generate different optimized simulations for them.

Source: https://medium.com/intel-software-innovators/rl-coach-environments-with-cartpole-and-atari-optimized-by-open-vino-toolkit1-6088349bf657
